The capabilities of modern artificial intelligence often seem like acts of magic. From recognizing images to driving vehicles, AI is transforming our world at an astonishing speed. However, this “magic” comes at a cost: most of these systems, built on artificial neural networks such as LLMs, operate as “black boxes,” offering answers without any explicit explanation of their inner reasoning. Phenomena such as hallucinations and vulnerabilities such as prompt injection, among others, make LLMs (and other GenAI tools) ill-suited for decisions that must be reliable.

Since the early days of AI, dating back to the Dartmouth Conference in 1956, explainable models have attracted researchers’ attention because of their applicability to critical processes. Reliability and traceability are mandatory when an intelligent algorithm is used to automate and control crucial processes. Consider a simple question: would you trust an LLM-based decision maker to drive you home or control the power supply of a city? Would you be sure that the decisions made in your company are the best ones if they come with no explanation? Explainable AI tackles these problems by offering a bridge across the black-box abyss. Its mission is to make AI reveal the “how” and “why” behind its decisions.

From a legal perspective, the need for transparency in AI has moved beyond technical and ethical debate. Specifically, since 2018, when the General Data Protection Regulation (GDPR) became applicable, individuals in the European Union have had a legal right to obtain “meaningful information about the logic involved” in automated decisions made using their data. This regulation is a pioneer worldwide.

The importance of this fact cannot be overstated. It signals a fundamental shift in which the accountability of automated systems is being codified into law. This empowers individuals to demand clarity and compels organizations to be accountable for the algorithms they implement, especially those that make decisions that profoundly affect people’s lives.

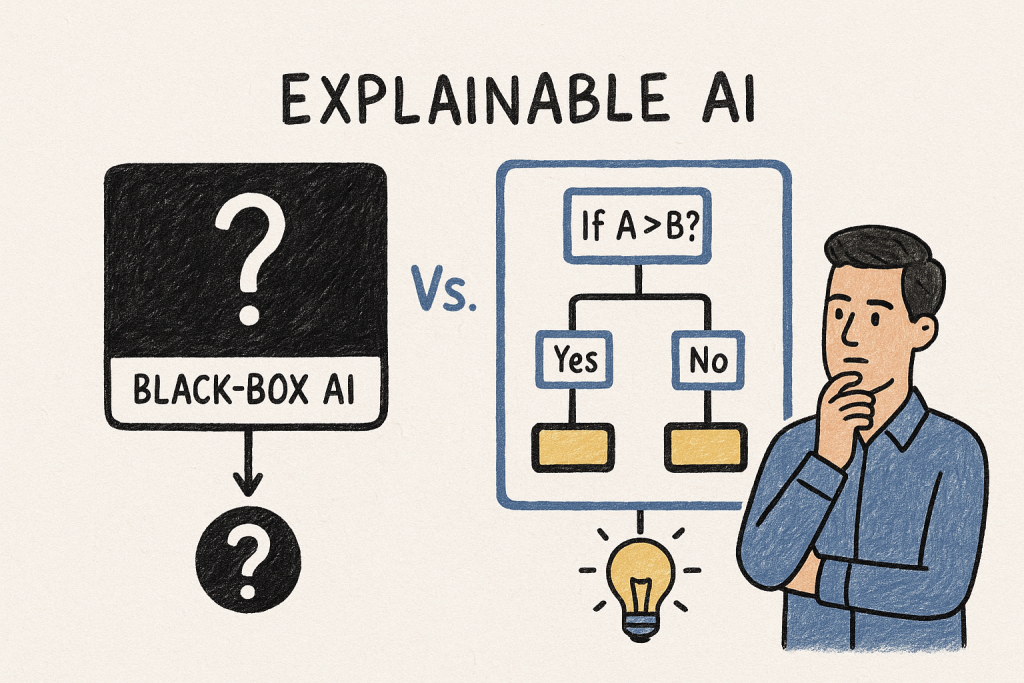

Overall, explainable AI systems can be classified into two broad families:

Inherently interpretable models are explainable by design. Their reasoning follows explicit logical structures—“If A, then B”—so that every inference can be traced back to well-defined rules. Decision trees, Bayesian networks, and fuzzy logic are examples of this approach. Their transparency makes them uniquely valuable: anyone, even without a deep technical background, can understand how they arrive at a conclusion. For this reason, they are regarded as the most reliable option for high-stakes decisions, such as in finance, healthcare, or critical infrastructure. In these domains, clarity and verifiability are not just desirable features; they are non-negotiable. When lives, resources, or safety are on the line, black-box systems simply cannot offer the same level of accountability.
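To make the “If A, then B” idea concrete, here is a minimal sketch of a rule-based decision that records which rules were checked and which one fired. The loan-screening scenario, the thresholds, and the names `Rule`, `RULES`, and `decide` are all hypothetical and chosen only for illustration; a real system would take its rules from domain experts.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str                          # human-readable label for the trace
    condition: Callable[[dict], bool]  # the "If A" part
    outcome: str                       # the "then B" part


# Hypothetical rules, checked in order; the first rule that fires wins.
RULES = [
    Rule("income_below_minimum", lambda a: a["income"] < 20000, "reject"),
    Rule("high_debt_ratio", lambda a: a["debt"] / a["income"] > 0.5, "reject"),
    Rule("default", lambda a: True, "approve"),
]


def decide(applicant: dict):
    """Return the decision plus a trace of every rule that was evaluated."""
    trace = []
    for rule in RULES:
        fired = rule.condition(applicant)
        trace.append((rule.name, fired))
        if fired:
            return rule.outcome, trace
    raise ValueError("no rule matched")


decision, trace = decide({"income": 30000, "debt": 18000})
print(decision)  # reject
print(trace)     # [('income_below_minimum', False), ('high_debt_ratio', True)]
```

The trace is the explanation: anyone reading it can see that the applicant passed the income check and was rejected because of the debt ratio, with no further tooling required.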

Post-hoc interpretability, in contrast, seeks to explain the outputs of opaque models after the fact. These methods don’t open the black box but probe it to approximate its behavior. Approaches such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) help identify which inputs were most influential in a given decision. Visual tools for convolutional neural networks—like saliency maps or Class Activation Mapping—provide another lens, highlighting the pixels or regions that guided a model’s prediction. While powerful, these methods only offer partial insights and cannot guarantee full traceability.
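The idea of probing a model from the outside can be sketched in a few lines. The example below is not LIME or SHAP; it is a much simpler perturbation (occlusion-style) scheme that replaces one input at a time with a baseline value and measures how much the output changes. The `black_box` function, the feature names, and the all-zero baseline are assumptions made purely for illustration.

```python
def black_box(features: dict) -> float:
    # Stand-in for an opaque model (hypothetical). The explainer below
    # only calls it and never inspects how it works.
    return 3.0 * features["age"] + 0.5 * features["income"] - 2.0 * features["debt"]


def occlusion_attribution(model, instance: dict, baseline: dict) -> dict:
    """Score each feature by the output change when it is reset to its baseline."""
    full_output = model(instance)
    scores = {}
    for name in instance:
        perturbed = dict(instance)         # copy so the original is untouched
        perturbed[name] = baseline[name]   # "remove" one feature
        scores[name] = full_output - model(perturbed)
    return scores


instance = {"age": 40, "income": 100, "debt": 10}
baseline = {"age": 0, "income": 0, "debt": 0}
scores = occlusion_attribution(black_box, instance, baseline)
print(scores)  # {'age': 120.0, 'income': 50.0, 'debt': -20.0}
```

The scores suggest which inputs pushed the output up or down for this one instance, but they depend on the chosen baseline and say nothing about interactions between features, which illustrates why such explanations remain partial.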

And yet, despite the strengths and proven reliability of inherently interpretable models, today’s spotlight shines almost exclusively on large language models (LLMs) and other neural-based systems. Why is that? The answer lies in what each family of models is designed to do. Interpretable models are precise, trustworthy, and transparent, but they struggle when facing the chaos of unstructured data. LLMs, by contrast, thrive in exactly that domain: they generate fluent text, adapt to ambiguity, and uncover patterns too complex for symbolic rules. Their success explains the current fascination with them—but it also exposes their greatest weakness: their reasoning remains hidden, their decisions unverifiable.

In the long term, the smartest approach is not to bet everything on one technology. Instead, it is about understanding the unique strengths of each AI model and applying the right tool for the job. Use LLMs for generative tasks where creativity is key, and rely on explainable models when transparency and accountability are non-negotiable. As AI becomes integrated into our daily lives, from medical diagnoses to financial decisions, the ability to understand and trust its reasoning is no longer a luxury but a necessity. The development of explainable AI methods is not a technical curiosity but a fundamental pillar of a future where AI is a truly reliable tool. A deep knowledge of the limitations of each AI method allows us to apply each one precisely where it fits, paving the way toward a truly intelligent future.

At Nuxia, we envision a future of digital work where humans focus on strategic, meaningful tasks while automation handles routine administrative duties and the supervision of key systems. We are creating and marketing our Digital Workers: highly specialized AI agents designed to automate critical tasks. These agents can process massive amounts of information around the clock, which is especially useful for tasks like surveillance, marketing, or customer support. Each of our Digital Workers is equipped with the AI method, or combination of methods, that best fits the specific task. This ensures they are not only impactful for our clients but also compliant with EU regulations on traceability and security.